Please contact @legalaware if you would like to tweet constructively with the author about the ideas contained herein.

The “purpose” of an aeroplane crash investigation is apparently as set out in the tweet below:

It seems appropriate to extend the “lessons from the aviation industry” to the question of how to approach blame and patient safety in the NHS. Dr Kevin Fong, a consultant in anaesthetics (amongst many other specialties) at UCLH NHS Foundation Trust, highlighted this week in his excellent BBC Horizon programme how an abnormal cognitive reaction to failure can make patient safety issues harder to manage in real time. Management thinking has long distinguished between “managers” and “leaders”, and it is useful to consider what the rôle of each type of NHS employee might be, particularly given the political drive for ‘better leadership’ in the NHS.

In corporate settings, the reasons for ‘denial about failure’ are well established (see, e.g., Richard Farson and Ralph Keyes writing in the Harvard Business Review, August 2002):

“While companies are beginning to accept the value of failure in the abstract - at the level of corporate policies, processes, and practices - it’s an entirely different matter at the personal level. Everyone hates to fail. We assume, rationally or not, that we’ll suffer embarrassment and a loss of esteem and stature. And nowhere is the fear of failure more intense and debilitating than in the competitive world of business, where a mistake can mean losing a bonus, a promotion, or even a job.”

Farson and Keyes (2002) identify early on the potential benefits of “failure-tolerant leaders”:

“Of course, there are failures and there are failures. Some mistakes are lethal - producing and marketing a dysfunctional car tire, for example. At no time can management be casual about issues of health and safety. But encouraging failure doesn’t mean abandoning supervision, quality control, or respect for sound practices, just the opposite. Managing for failure requires executives to be more engaged, not less. Although mistakes are inevitable when launching innovation initiatives, management cannot abdicate its responsibility to assess the nature of the failures. Some are excusable errors; others are simply the result of sloppiness. Those willing to take a close look at what happened and why can usually tell the difference. Failure-tolerant leaders identify excusable mistakes and approach them as outcomes to be examined, understood, and built upon. They often ask simple but illuminating questions when a project falls short of its goals:

- Was the project designed conscientiously, or was it carelessly organized?

- Could the failure have been prevented with more thorough research or consultation?

- Was the project a collaborative process, or did those involved resist useful input from colleagues or fail to inform interested parties of their progress?

- Did the project remain true to its goals, or did it appear to be driven solely by personal interests?

- Were projections of risks, costs, and timing honest or deceptive?

- Were the same mistakes made repeatedly?”

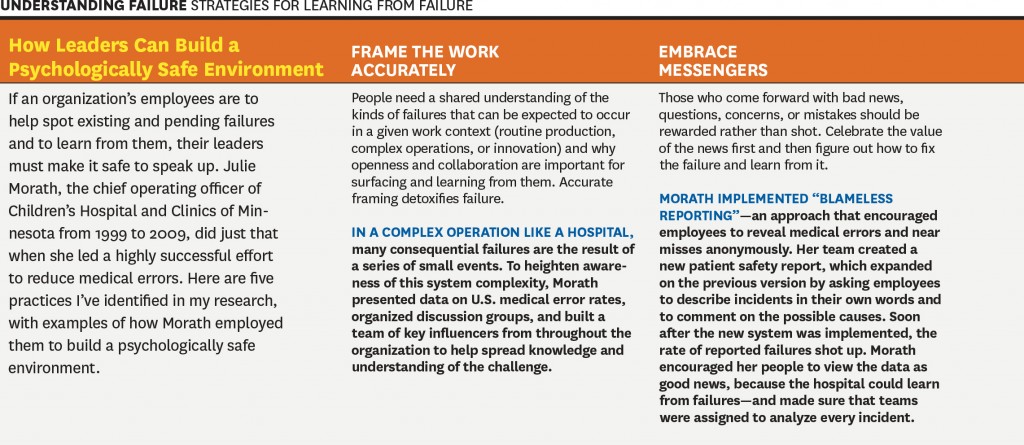

It is incredibly difficult to identify who is ‘accountable’ or ‘responsible’ for potential failures in patient safety in the NHS: is it David Nicholson, as widely discussed, or any of the Secretaries of State for Health? The popular media tend to look for someone to hold responsible, and the need to attach blame can itself be a barrier to learning from failure. As Amy C. Edmondson, also writing in the Harvard Business Review, observes:

“The wisdom of learning from failure is incontrovertible. Yet organizations that do it well are extraordinarily rare. This gap is not due to a lack of commitment to learning. Managers in the vast majority of enterprises that I have studied over the past 20 years—pharmaceutical, financial services, product design, telecommunications, and construction companies, hospitals, and NASA’s space shuttle program, among others—genuinely wanted to help their organizations learn from failures to improve future performance. In some cases they and their teams had devoted many hours to after-action reviews, post mortems, and the like. But time after time I saw that these painstaking efforts led to no real change. The reason: Those managers were thinking about failure the wrong way.”

Learning from failure is of course extremely important in the corporate sector, and some of the lessons might productively be transposed to the NHS too. This is from the same article:

However, is this a cultural issue or a leadership issue? In an excellent “thought paper” for the Health Foundation, Michael Leonard and Allan Frankel begin to address this question:

“A robust safety culture is the combination of attitudes and behaviours that best manages the inevitable dangers created when humans, who are inherently fallible, work in extraordinarily complex environments. The combination, epitomised by healthcare, is a lethal brew.

Great leaders know how to wield attitudinal and behavioural norms to best protect against these risks. These include: 1) psychological safety that ensures speaking up is not associated with being perceived as ignorant, incompetent, critical or disruptive (leaders must create an environment where no one is hesitant to voice a concern and caregivers know that they will be treated with respect when they do); 2) organisational fairness, where caregivers know that they are accountable for being capable, conscientious and not engaging in unsafe behaviour, but are not held accountable for system failures; and 3) a learning system where engaged leaders hear patients and front-line caregivers’ concerns regarding defects that interfere with the delivery of safe care, and promote improvement to increase safety and reduce waste. Leaders are the keepers and guardians of these attitudinal norms and the learning system.”

Whatever the debate about which measure most accurately describes mortality in the NHS, it is clear that some NHS trusts may have genuine problems, which need to be judged case by case (see, for example, this transcript of “File on 4”’s “Dangerous hospitals”), prompting further investigation through Sir Bruce Keogh’s “hit list”. Whilst headlines trumpeting dramatic statistics, such as “Another nine hospital trusts with suspiciously high death rates are to be investigated, it was revealed today”, are definitely unhelpful, there is clearly something here to investigate.

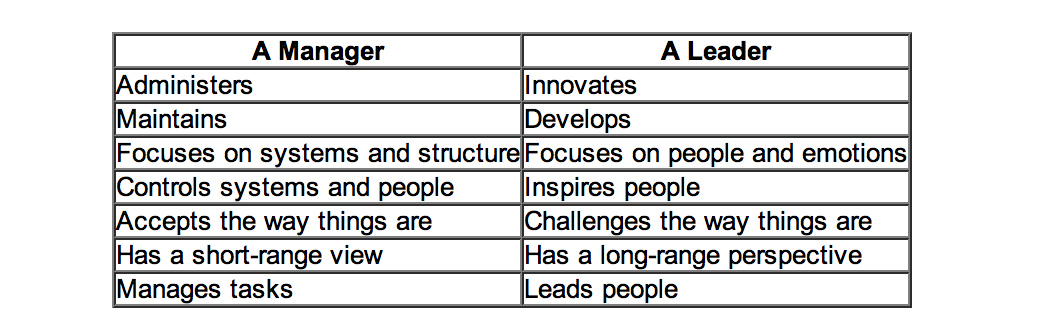

Is this even a question of leadership or of management? One of the most famous distinctions between managers and leaders was made by Warren Bennis, a professor at the University of Southern California. As Bennis famously put it, “Managers do things right but leaders do the right things”. Doing the right thing, it is argued, is a much more philosophical concept: it makes us think about the future, about vision and dreams, and this is a trait of a leader. Bennis goes on to compare these ideas in more detail; the table below is based on his work:

Indeed, people are currently scrabbling around for “A new style of leadership for the NHS”, as described in this Guardian article.

Is patient safety predominantly a question of “teamwork”?

Amalberti et al. (2005) make some interesting observations about teamwork and professionalism:

“A growing movement toward educating health care professionals in teamwork and strict regulations have reduced the autonomy of health care professionals and thereby improved safety in health care. But the barrier of too much autonomy cannot be overcome completely when teamwork must extend across departments or geographic areas, such as among hospital wards or departments. For example, unforeseen personal or technical circumstances sometimes cause a surgery to start and end well beyond schedule. The operating room may be organized in teams to face such a change in plan, but the ward awaiting the patient’s return is not part of the team and may be unprepared. The surgeon and the anesthesiologist must adopt a much broader representation of the system that includes anticipation of problems for others and moderation of goals, among other factors. Systemic thinking and anticipation of the consequences of processes across departments remain a major challenge.”

Weiser et al. (2010) observe that:

“Medical teams are generally autocratic, with even more extreme authority gradient in some developing countries, so there is little opportunity for error catching due to cross-check. A checklist is ‘a formal list used to identify, schedule, compare or verify a group of elements or… used as a visual or oral aid that enables the user to overcome the limitations of short-term human memory’. The use of checklists in health care is increasingly common. One of the first widely publicized checklists was for the insertion of central venous catheters. This checklist, in addition to other team-building exercises, helped significantly decrease the central line infection rate per 1000 catheter days from 2.7 at baseline to zero.”

M. van Beuzekom et al. (2013) additionally describe an interesting example from the Netherlands. Teams in healthcare are often formed ad hoc, much as airline crews are. The teams consist of members of several different disciplines who work together for a particular operation or for the whole operating day. This task-oriented team model, with its high level of specialisation, has historically focused on the technical expertise and performance of individual members, with little emphasis on interpersonal behaviour and teamwork. In this model, communication is learned informally and developed with experience. This places substantial demands on the non-clinical skills of team members, especially in high-demand situations such as crises.

Bleetman et al. (2012) note that, “whenever aviation is cited as an example of effective team management to the healthcare audience, there is an almost audible sigh.” Debriefing is the final teamwork behaviour that closes the loop and facilitates both teamwork and learning. Sustaining these team behaviours depends on the ability to capture information from front-line caregivers and to act on it. In aviation, briefings are a ‘must-do’, not an optional extra. They are performed before every take-off and every landing. They serve to share the plan for what should happen and what could happen, to distribute the workload efficiently, and to prevent and manage unexpected problems. So how could we fit briefings into emergency medicine? Even though staff may be reluctant to leave the computer screen in a busy department, it is likely to be worth assembling the team for a few minutes to bring some order and structure to the department and to plan the shift.

Briefing points could cover:

- The current situation

- Who is present on the team and their experience level

- Who is best suited to which patients and crises, so that team members are deployed effectively rather than haphazardly

- The identification of possible traps and hazards such as staff shortages ahead of time

- Shared opinions and concerns.

The authors add that, “at the end of the shift a short debriefing is useful to thank staff and identify what went well and what did not. Positive outcomes and initiatives can be agreed.”

Is patient safety predominantly a question of “leadership”?

The literature emphasises the importance of team members who have a good sense of “situational awareness” of the patient safety issues evolving around them. However, it is increasingly recognised that to provide effective clinical leadership in such situations, the “team leader” needs to develop a certain set of non-clinical skills. This demands more than currency in advanced paediatric life support or advanced trauma life support; it requires the confidence (underpinned by clinical knowledge) to guide, lead and assimilate information from multiple sources in order to make quick and sound decisions. The team leader is bound to encounter different personalities, levels of seniority, expectations and behaviours among members of the team, each of whom will have their own insecurities, personality, anxieties and ego.

Amalberti et al. (2005) begin to develop a complex narrative on the relationship between leadership and management (and the patients whom “they serve”):

“Systems have a definite tendency toward constraint. For example, civil aviation restricts pilots in terms of the type of plane they may fly, limits operations on the basis of traffic and weather conditions, and maintains a list of the minimum equipment required before an aircraft can fly. Line pilots are not allowed to exceed these limits even when they are trained and competent. Hence, the flight (product) offered to the client is safe, but it is also often delayed, rerouted, or cancelled. Would health care and patients be willing to follow this trend and reject a surgical procedure under circumstances in which the risks are outside the boundaries of safety? Physicians already accept individual limits on the scope of their maximum performance in the privileging process; societal demand, workforce strategies, and competing demands on leadership will undermine this goal. A hard-line policy may conflict with ethical guidelines that recommend trying all possible measures to save individual patients.”

Conclusion

Even if one decides to blame the pilot of the plane, one has to wonder to what extent the CEO of the entire airline might be to blame. The question for the NHS has become: who exactly is the pilot of the plane? Is it the CEO of the NHS Foundation Trust, the CEO of NHS England, or someone else entirely? And rumbling on in this debate is whether the plane has definitely crashed: some relatives of passengers are in absolutely no doubt that it has, and they have to live with the wreckage daily. Politicians then have to decide whether the pilot ought to resign (has he done something fundamentally wrong?) or whether something much more distal has gone wrong, with his cockpit crew, for example. And whichever figurehead, if any, is identified for problems on this particular flight, should that figurehead be encouraged to work in a culture where problems in flying the plane are identified and corrected safely? And finally, is this a lone plane which has crashed (or not), or are there other reports of crashes or near-misses to come?

References

Learning from failure

Farson, R, Keyes, R. (2002) The failure-tolerant leader. Harvard Business Review, August 2002.

Edmondson, AC. (2011) Strategies for learning from failure. Harvard Business Review, April 2011.

Patient safety

Amalberti, R, Auroy, Y, Berwick, D, Barach, P. (2005) Five system barriers to achieving ultrasafe health care. Ann Intern Med 142: 756–764.

Bleetman, A, Sanusi, S, Dale, T, Brace. (2012) Human factors and error prevention in emergency medicine. Emerg Med J 29: 389–393. doi:10.1136/emj.2010.107698

Federal Aviation Administration. Section 12: Aircraft Checklists for 14 CFR Parts 121/135 iFOFSIMSF.

Pronovost, P, Needham, D, Berenholtz, S, et al. (2006) An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med 355: 2725–2732.

van Beuzekom, M, Boer, F, Akerboom, S, Dahan, A. (2013) Perception of patient safety differs by clinical area and discipline. British Journal of Anaesthesia 110(1): 107–114.

Weiser, TG, Haynes, AB, Lashoher, A, Dziekan, G, Boorman, DJ, Berry, WR, Gawande, AA. (2010) Perspectives in quality: designing the WHO Surgical Safety Checklist. International Journal for Quality in Health Care 22(5): 365–370.